How to Write Effective AI Prompts

Is your ChatGPT output bland, uninspired, or just off-topic? Fix your prompt! #

Remember this AI truism: garbage in, garbage out.

What is an AI prompt anyways? #

Writing effective prompts for ChatGPT is crucial for harnessing its full potential. A prompt is essentially your instruction to the AI, guiding it on what to generate. The precision of your input directly influences the quality of the output you get from ChatGPT and other AIs.

Make sure you check out this tutorial as well.

Why do I need a good AI prompt? #

Many new users tend to input vague, brief statements, expecting detailed responses. However, generative AI operates on a ‘garbage-in, garbage-out’ principle. A poorly structured prompt often leads to unexpected or unsatisfactory results. That’s where the art of prompt engineering comes in – it’s about meticulously crafting your request to ensure clarity and specificity.

This precision in prompt crafting is why tools like AIPRM and their communities are invaluable. They offer well-thought-out prompts, helping users quickly and effectively communicate their needs to the AI. Investing time in crafting a clear, detailed prompt pays off with more accurate and useful responses, turning ChatGPT into a more powerful tool for your needs.

What is Prompt Engineering? #

Prompt engineering changes how ChatGPT responds, for instance by tailoring its output to mimic a specific character or tone. Here is an example. By instructing ChatGPT to “Act as Logan Roy from the TV show Succession,” you set a clear context and personality for the response. The prompt is concise yet detailed, directing the AI to respond as Logan would to a specific situation: his children going bankrupt.

The result?

A response that captures Logan Roy’s essence – terse, authoritative, and laced with the character’s signature disdain and resilience. It’s distinctly different from your own voice, showcasing the power of well-crafted prompts in shaping ChatGPT’s output to suit varied scenarios and styles. This approach is key for anyone looking to get the most out of their interactions with generative AI tools.

What is the Context Window? AI Model Memory. #

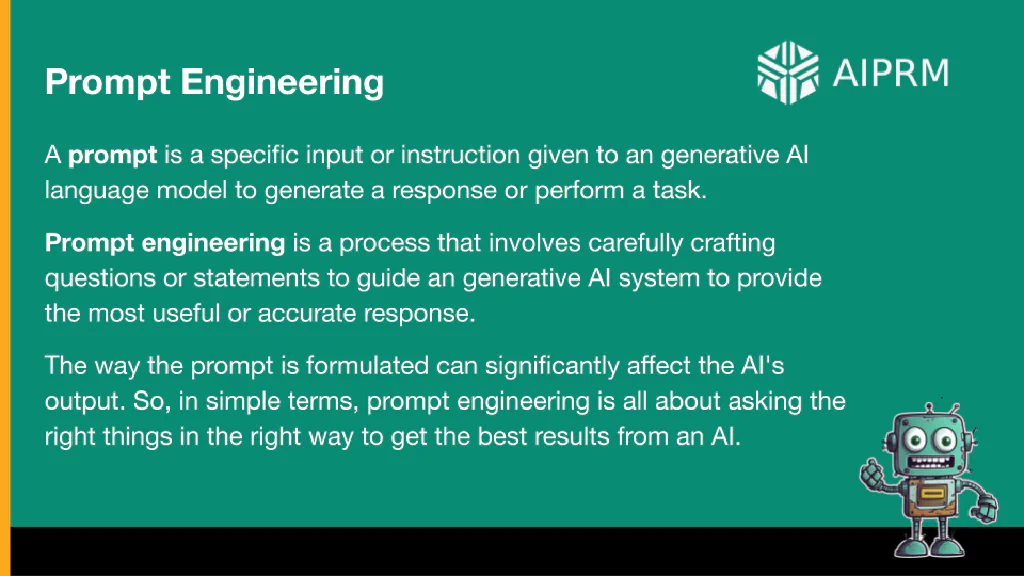

Understanding the context window in an AI model, like GPT-4, is crucial for effective communication with the AI. This ‘context window’ is essentially the AI’s short-term memory, encompassing the ongoing dialogue between the user and the tool.

GPT-4’s context window is impressively large: the largest model can retain up to 128,000 tokens, or about 96,000 words, roughly equivalent to 400 pages of text. Other current AI models go up to 200,000 tokens, and by the time you read this, an even bigger model may be out.

This expansive memory allows it to reference a vast amount of information from earlier in the conversation, which significantly influences its responses.
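To make the size figures above concrete, here is a minimal sketch of the arithmetic. The two ratios are not official constants. They are simply what this article's own numbers imply (128,000 tokens, 96,000 words, 400 pages), and the function names are made up for illustration.

```python
# Ratios implied by the figures in this article (assumptions, not official
# constants): 128,000 tokens ~ 96,000 words ~ 400 pages.
WORDS_PER_TOKEN = 96_000 / 128_000  # 0.75 words per token
WORDS_PER_PAGE = 96_000 / 400       # 240 words per page


def tokens_to_words(tokens: int) -> int:
    """Estimate how many words fit in a given number of tokens."""
    return round(tokens * WORDS_PER_TOKEN)


def tokens_to_pages(tokens: int) -> int:
    """Estimate how many pages of text fit in a given number of tokens."""
    return round(tokens_to_words(tokens) / WORDS_PER_PAGE)


if __name__ == "__main__":
    for window in (128_000, 200_000):
        print(window, tokens_to_words(window), tokens_to_pages(window))
```

Real token counts depend on the model's tokenizer and on the language of the text, so treat these as rough estimates only.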

The importance of this lies in the continuity and relevance of the AI’s responses. As you switch topics within the same chat session, everything previously discussed shapes the responses you receive. This can be advantageous for complex, multi-faceted conversations but can also lead to confusion if topics are wildly divergent.

To optimize the use of GPT-4 and similar tools, it’s advisable to start new chat sessions when embarking on entirely new topics. This approach ensures that the context window is clean and focused, allowing for more accurate and relevant responses from the AI, tailored to the specific topic at hand.
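The advice to start a new chat for a new topic can be sketched in code. This is only an illustration, assuming the tool resends the running message history on every turn (a common design, but an assumption here). `ChatSession` and `session_for` are hypothetical names, not part of ChatGPT or AIPRM.

```python
from typing import Dict, List

Message = Dict[str, str]


class ChatSession:
    """Hypothetical container for one chat's history (its context)."""

    def __init__(self, topic: str) -> None:
        self.topic = topic
        self.messages: List[Message] = []

    def add(self, role: str, content: str) -> None:
        """Append one turn to this session's history."""
        self.messages.append({"role": role, "content": content})

    def context(self) -> List[Message]:
        """Return a copy of everything the model would see on the next turn."""
        return list(self.messages)


def session_for(topic: str, sessions: Dict[str, ChatSession]) -> ChatSession:
    """Reuse the session for a known topic; start a clean one otherwise."""
    if topic not in sessions:
        sessions[topic] = ChatSession(topic)
    return sessions[topic]


if __name__ == "__main__":
    sessions: Dict[str, ChatSession] = {}
    session_for("succession", sessions).add("user", "Act as Logan Roy.")
    session_for("plumbing", sessions).add("user", "Explain a clogged toilet.")
    # The plumbing chat carries no trace of the Logan Roy role-play.
    print(session_for("plumbing", sessions).context())
```

Keeping one history per topic means an earlier role-play or instruction cannot leak into an unrelated request.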

Literal Understanding of Language in LLMs #

Precision in your choice of words is paramount when crafting prompts for ChatGPT, much like communicating with Amelia Bedelia, the literal-minded book character. This specificity is key in guiding the AI to your intended outcome.

- Verbs: Choose them carefully. Instead of a general term like “rewrite,” opt for “condense” if you want a summary, or “extrapolate” to expand on the information. This specificity directs ChatGPT’s approach more accurately.

- Adjectives: These are crucial, especially when setting the tone and voice of your content. Be descriptive and clear about the style or emotion you want to convey.

- Entities (People, Places, Things): To avoid confusion, it’s a good practice to put names and specific entities in quotes. For instance, saying “Logan Roy” rather than just Logan or Roy clarifies that you’re referring to the character from “Succession,” not someone else or something else like Roy Rogers.

- Specificity: The more detailed and precise your prompt, the more accurate and relevant the response. Ambiguity can lead to creative but potentially off-target answers. If you seek a specific type of response or information, make sure your prompt leaves no room for misinterpretation.

Remember, ChatGPT, like Amelia Bedelia, interprets prompts literally. Carefully chosen words help ensure that the responses you receive align closely with your intentions.
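The word-choice tips above can be sketched as a small helper. This is an illustration rather than a required technique. The intent names ("shorten", "expand") and all function names are assumptions. Only the verbs “rewrite,” “condense,” and “extrapolate” and the quoting tip come from this article.

```python
from typing import List

# Illustrative mapping: the article names "rewrite" as a general verb and
# "condense" / "extrapolate" as more specific alternatives.
SPECIFIC_VERBS = {"shorten": "condense", "expand": "extrapolate"}


def pick_verb(intent: str) -> str:
    """Map an intent to a specific verb; fall back to the general 'rewrite'."""
    return SPECIFIC_VERBS.get(intent, "rewrite")


def quote_entity(name: str) -> str:
    """Wrap a name in double quotes so it reads as one specific entity."""
    return f'"{name}"'


def build_request(intent: str, tone: List[str], entity: str, source: str) -> str:
    """Combine a precise verb, tone adjectives, and quoted entities."""
    verb = pick_verb(intent).capitalize()
    tone_text = ", ".join(tone)
    return (
        f"{verb} the text below in a {tone_text} tone, "
        f"in the voice of {quote_entity(entity)} from {quote_entity(source)}."
    )


if __name__ == "__main__":
    print(build_request("shorten", ["terse", "authoritative"], "Logan Roy", "Succession"))
```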

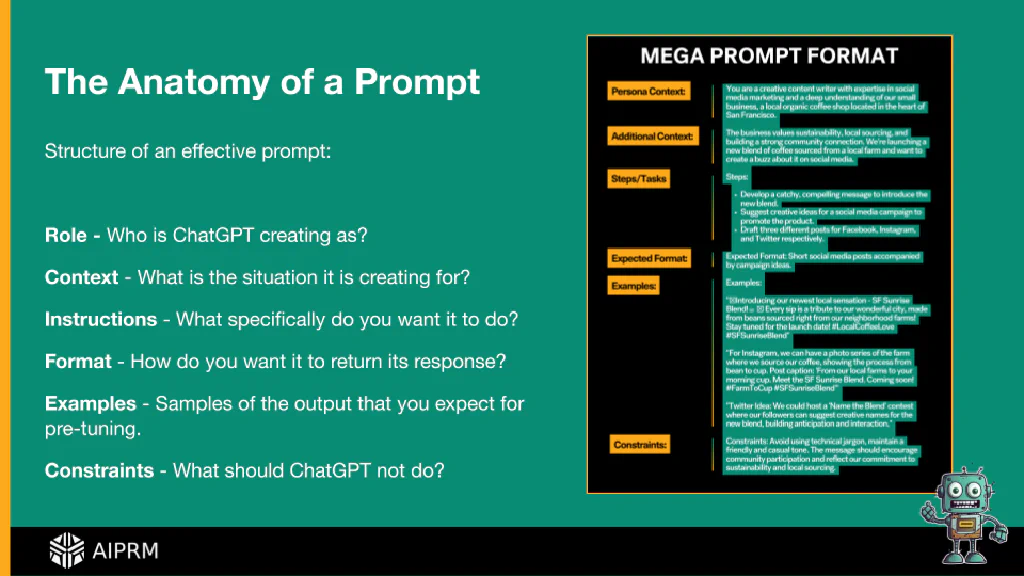

The Anatomy of an AI Prompt #

The anatomy of a prompt, or at least of the sort of prompts that we write here at AIPRM and that many people in our community write as well, looks like this. The order of these items doesn’t really matter. You just want to make sure that the prompt makes sense when you write it to ChatGPT.

- The role: who is the GPT acting as?

- Is it a person, is it a specific type of profession?

Just be very specific about that. That’s going to limit the context of its response.

Then there’s just general context.

- Where is this person?

- What are they writing for?

- Are they writing a book?

- Is it a plumber that’s at someone’s home talking about toilets being clogged?

Pretend you’re talking to a person #

Whatever it is, give the context for that person that’s meant to be responding.

The instructions:

- What do you want them to do?

- Do you want them to write a specific thing in a specific order?

- Do you want them to have specific headings in the content that they’re giving you?

- Do you want them to write large paragraphs, short paragraphs?

- Do you want them to account for things like perplexity so that you can trick an AI detector?

All those instructions need to be baked in there.

The format: that’s also going to inform what it responds with.

- Is it meant to be a tweet?

- Is it meant to be a chapter in a book?

- Is it meant to be a blog post?

Whatever format you’re thinking about, be very specific about it, because the format limits or expands the number of words in the response.

Ideally, if you have examples, provide them, because the AI can mimic very closely what you want when you show it. Examples aren’t a requirement, but they are the quickest way to get to what you want.

Finally, any constraints: If you don’t want it to use technical jargon, if you want it to use a specific voice or tone, if you want it to be a specific type of message, make sure that you put that in the constraints.

As you write your prompts using this format, it’s going to become second nature.
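The parts described above (role, context, instructions, format, examples, constraints) can be assembled with a small template. This is a minimal sketch, not AIPRM's actual template format. The class, field, and label names are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PromptParts:
    """The six prompt parts; examples and constraints are optional."""
    role: str
    context: str
    instructions: List[str]
    output_format: str
    examples: List[str] = field(default_factory=list)
    constraints: List[str] = field(default_factory=list)


def render_prompt(parts: PromptParts) -> str:
    """Join the parts into one prompt, skipping empty optional sections."""
    lines = [
        f"Role: {parts.role}",
        f"Context: {parts.context}",
        "Instructions:",
    ]
    lines += [f"- {item}" for item in parts.instructions]
    lines.append(f"Format: {parts.output_format}")
    if parts.examples:
        lines.append("Examples:")
        lines += [f"- {item}" for item in parts.examples]
    if parts.constraints:
        lines.append("Constraints:")
        lines += [f"- {item}" for item in parts.constraints]
    return "\n".join(lines)


if __name__ == "__main__":
    plumber = PromptParts(
        role="a plumber",
        context="at a customer's home, talking about a clogged toilet",
        instructions=["Explain the likely cause", "Use short paragraphs"],
        output_format="a blog post",
        constraints=["No technical jargon"],
    )
    print(render_prompt(plumber))
```

The order of the sections here is just one choice. As noted above, the order doesn’t really matter as long as the finished prompt makes sense.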

Best Practice in AI Prompting #

Starting your prompts with a specific role like “New York Times bestselling author” is a smart strategy.

This sets a high standard for the quality of writing you expect from ChatGPT. It signals that you’re looking for responses that are not just well-written but also carry a certain prestige and expertise in the craft.

Adding specific requirements, like asking for “a lot of technical detail,” helps further refine the response. It narrows down ChatGPT’s focus to meet your precise needs, ensuring the output aligns closely with your expectations in terms of depth and complexity.

This approach is effective in avoiding generic or superficial responses, guiding the AI towards producing content that meets professional standards and specific criteria.

What do you think? #

And that wraps up our discussion on effective prompt crafting. Remember, if you’re not yet using AIPRM to enhance your experience with AIs like ChatGPT, it’s worth exploring, especially given its free access.

For additional support or feedback on your prompts, the AIPRM community forum is a valuable resource.

Don’t forget to check out forum.aiprm.com for more insights and interactions.
