50+ Must-Know Statistics on Bias in AI for 2025/26

The accelerated development of AI since late 2022 has brought with it countless possibilities for people, businesses, and industries worldwide. However, AI adoption has also magnified certain risks and limitations.

Bias has long been acknowledged as a potential problem with artificial intelligence. Because the technology relies on human-generated information, its output can be subjective on issues like race, gender, politics, age, and healthcare.

But how common is bias in AI, and what can be done to ensure the technology remains a force for good? To find out more, AIPRM collated the most relevant AI bias statistics, covering gender bias in AI, ChatGPT bias, and how to reduce bias in AI.

Examples of Bias in AI #

- Over 83% of neuroimaging-based AI models designed for psychiatric diagnosis were considered to have a high risk of bias.

- 34% of marketers claim generative AI sometimes produces biased information.

- ChatGPT agreed with over 70% of political statements with green leanings, compared to around 55% of conservative statements.

- Women’s healthcare issues were more likely to be downplayed than men’s by certain large language models (LLMs).

- AI tools are more likely to favor male names than female names when ranking job resumes.

- AI recruitment tools are 30% more likely to filter out candidates over 40, compared to younger candidates with the same qualifications.

AI Trust & Regulation: What the Data Shows #

- 66% of US adults are highly concerned about people getting inaccurate information from AI.

- Nearly 6 in 10 US adults believe AI regulation won’t go far enough.

- 56% of AI experts at colleges or universities have “no” or “not too much” confidence that the US government will regulate the use of AI effectively.

- Conversely, 21% of people in the US believe AI regulation will go too far.

Does AI have bias? #

Research from the University of Southern California (USC) studied two AI databases (ConceptNet and GenericsKB) to analyze the fairness of their output and identify instances of bias. The study identified bias in 3.4% of ConceptNet’s data and nearly two-fifths (38.6%) of the output from GenericsKB.

The issues identified included both positive and negative biases on numerous issues, such as gender, race, nationality, religion, and occupation. Some of the most alarming biases and stereotypes identified across the data sets included:

- Women being viewed more negatively than men

- Muslims being associated with terrorism

- Mexicans being associated with poverty

- Policemen being associated with death

- Priests being associated with pedophilia

- Lawyers being associated with dishonesty.

It’s worth noting, however, that this USC study was released in May 2022, before the release of ChatGPT and the subsequent AI boom. Since then, developers have made significant technological progress and governments have introduced measures to reduce bias in AI.

In February 2025, OpenAI announced it would combat bias in AI with a new set of measures applied across all of its products (including ChatGPT). This was followed by the release of President Donald Trump’s “America’s AI Action Plan” in July 2025, which promised to build data center infrastructure while eradicating ideological bias.

How common is bias in AI? #

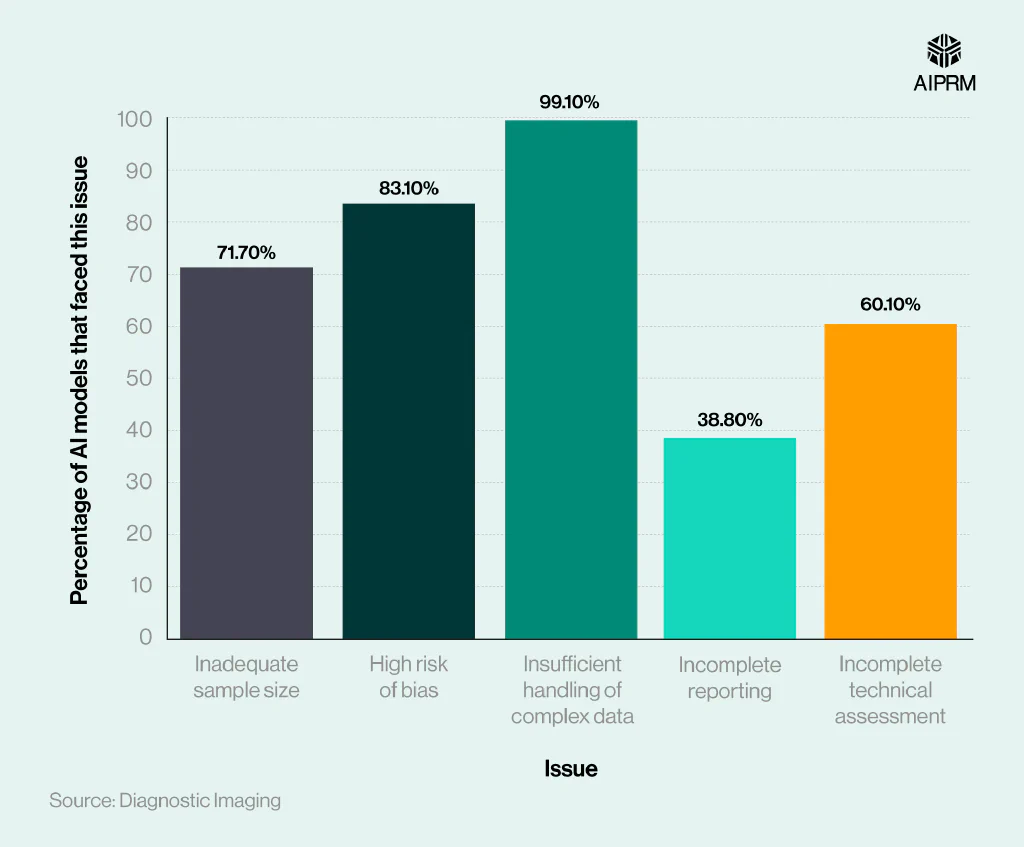

Over four in five (83.1%) of neuroimaging-based AI models were considered to have a high risk of bias, according to an article published by Diagnostic Imaging. The data (originally from JAMA Network Open) assessed 555 neuroimaging models used for detecting psychiatric disorders. The study also found that 71.7% of the models used inadequate sample sizes and 38.8% had incomplete reporting.

The prevalence of data issues found in neuroimaging-based AI models #

Almost all (99.1%) of the models included were considered lacking in their ability to handle complex data. However, this report was published in 2023 and therefore does not account for the significant technological advancements made in the subsequent years.

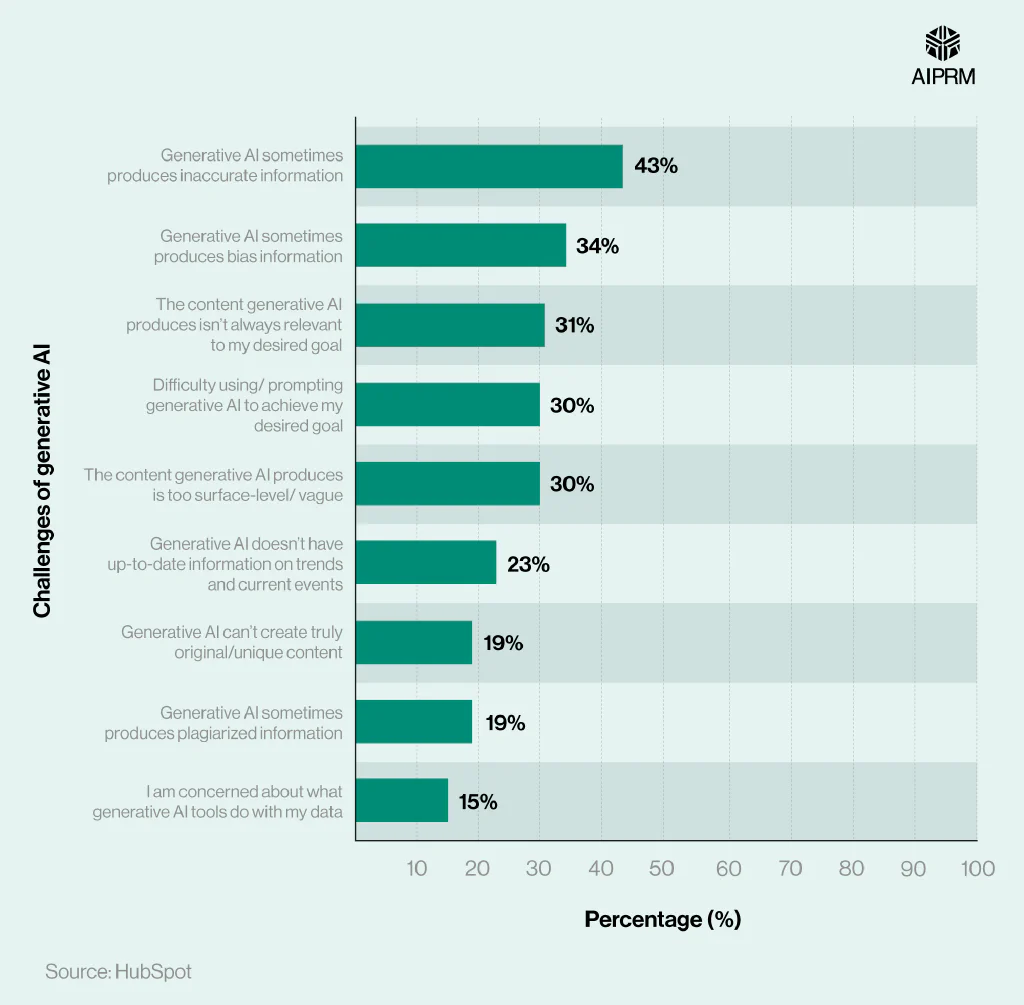

The most common challenges of generative AI among marketers #

A study on AI Trends for Marketers by HubSpot found that 34% of marketers say generative AI sometimes produces biased information. This was the second-most common issue identified, behind inaccurate data, which 43% cited as a challenge.

At the other end of the scale, just 15% expressed concerns around how their data is used by generative AI, with 19% highlighting plagiarism and unoriginal content as concerns.

ChatGPT bias statistics #

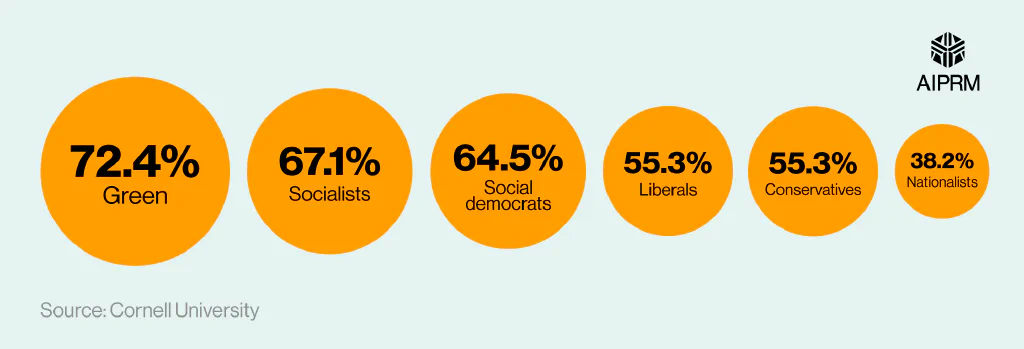

A study published on arXiv, the preprint server hosted by Cornell University, found that ChatGPT was more likely to display left-wing political beliefs. The researchers presented the AI tool with a range of common policies and views held by prominent political ideologies and recorded how many it agreed with.

Green political views were most likely to be endorsed by ChatGPT, with over seven in 10 (72.4%) being endorsed by the AI tool. This was over five percentage points more than any other ideology, with socialist views (67.1%) the only other ideology to score higher than two-thirds.

The agreement rate of ChatGPT by political ideology #

Social democrats had the third-highest percentage of agreement with statements, with a score of 64.5% – over 9 percentage points more than both conservatives and liberals.

Nationalist views were the only ones with an agreement rate below half, with a score of 38.2%, over 17 percentage points fewer than conservatives, and just over half the total for greens.
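The comparisons above mix two kinds of measure: percentage-point gaps (a subtraction) and relative size (a ratio). As a minimal sketch, the snippet below recomputes both from the four agreement rates quoted in this section. The dictionary keys and helper names are illustrative assumptions, and the conservative and liberal rates are left out because the text gives them only approximately.

```python
# Agreement rates (percent) as quoted in this section.
# Conservative and liberal rates are omitted: the text gives them only approximately.
AGREEMENT = {
    "green": 72.4,
    "socialist": 67.1,
    "social_democrat": 64.5,
    "nationalist": 38.2,
}


def point_gap(a: str, b: str) -> float:
    """Difference between two ideologies in percentage points."""
    return round(AGREEMENT[a] - AGREEMENT[b], 1)


def relative_size(a: str, b: str) -> float:
    """Rate for ideology `a` expressed as a fraction of the rate for `b`."""
    return round(AGREEMENT[a] / AGREEMENT[b], 3)


green_lead = point_gap("green", "socialist")
nationalist_share = relative_size("nationalist", "green")

print(f"Green lead over socialist: {green_lead} percentage points")
print(f"Nationalist rate as a share of green rate: {nationalist_share:.1%}")
```

The gap between greens and socialists comes out at 5.3 percentage points, and the nationalist rate is roughly 53% of the green rate, which matches "just over half".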


Gender bias in AI statistics #

Research from the University of Washington in October 2024 found significant gender and racial bias in three large language models (LLMs) when ranking job resumes.

The researchers varied CVs by name, using 120 different names traditionally associated with White or Black men and women. They then used LLMs from three prominent companies (Salesforce AI, Mistral AI, and Contextual AI) to rank the resumes of applicants for over 500 real-life job listings.

The systems were found to favor male names in 52% of cases, compared to just 11% for females. These differences were even more pronounced when comparing race, with traditional Black male names never preferred over names typically associated with White men.

However, these trends reversed when comparing traditional Black female names with Black male names, with the systems preferring female names 67% of the time, compared to just 15% for males.
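Preference rates like these are simple shares of comparison outcomes. The sketch below is not the researchers' code; it only shows how such rates could be tallied, assuming each resume comparison is recorded as one of three hypothetical labels. The counts are made up to mirror the 52% and 11% figures above, with the remainder treated as showing no preference.

```python
from collections import Counter


def preference_rates(outcomes: list[str]) -> dict[str, float]:
    """Return the share of comparisons recorded under each outcome label."""
    if not outcomes:
        raise ValueError("outcomes must not be empty")
    counts = Counter(outcomes)
    total = len(outcomes)
    return {label: count / total for label, count in counts.items()}


# Hypothetical tally of 100 comparisons (illustrative, not the study's raw data).
outcomes = ["male"] * 52 + ["female"] * 11 + ["no_preference"] * 37
rates = preference_rates(outcomes)

for label, rate in rates.items():
    print(f"{label}: {rate:.0%}")
```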

Elsewhere, a 2025 AI in healthcare study found gender disparities in prominent LLMs around symptom evaluation, with women’s physical and mental health issues more likely to be downplayed than men’s in certain models.

The findings led Dr. Sam Rickman, the lead report author and researcher at the London School of Economics and Political Science (LSE), to state that AI could lead to “unequal care provision for women.”


Racial bias in AI statistics #

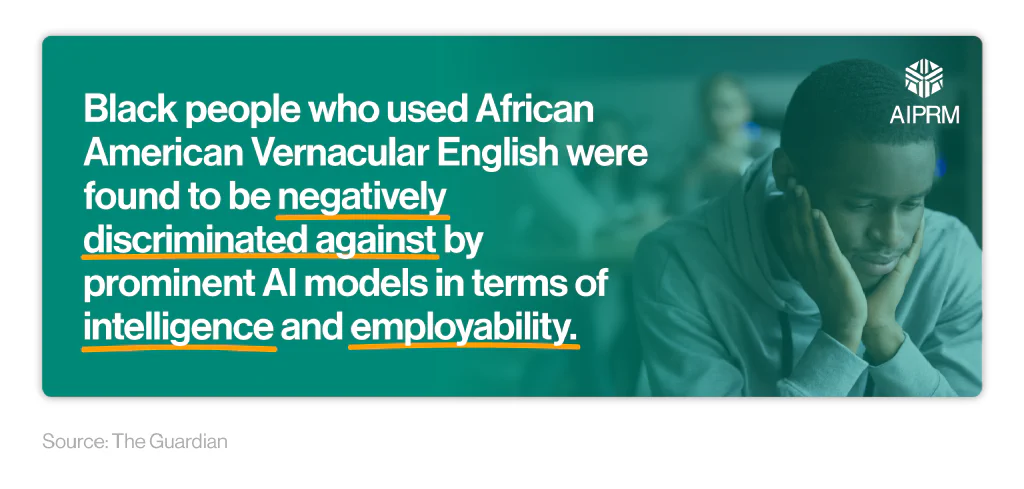

A 2024 article from The Guardian claimed that prominent AI tools such as ChatGPT and Gemini were found to discriminate against those who speak in African American Vernacular English (AAVE), an English dialect typically spoken by sections of Black Americans.

The report was based on a study published on arXiv, the preprint server hosted by Cornell University, that asked prominent AI tools to assess the intelligence and employability of people who speak using AAVE, compared to standard American English.

The paper found that Black people using AAVE in speech were more likely to be described as “stupid” or “lazy” by the AI tools and assigned lower-paying jobs.

The issue of racial bias in AI has also been observed in healthcare, with a study published in Science analyzing the output of a prominent AI algorithm within the healthcare sector.

The study found that Black patients who were assigned a given risk level by the algorithm were typically sicker than White patients with the same score. The authors of the study estimated that this more than halves the number of Black patients recommended for extra care, creating a racial healthcare disparity.

The primary reason for the algorithmic bias is attributed to healthcare costs being used as a proxy for health needs. As less money is spent on Black patients who have the same level of need, the algorithm mistakenly assumes that these patients are less sick than White patients. As such, eliminating cost as a determinant of healthcare needs is recommended as a way to reduce racial bias.
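The effect of using cost as a stand-in for need can be illustrated with a toy example. The sketch below is not the algorithm from the study. The two groups, the illness scores, and the spending rates in `SPEND_PER_NEED` are all hypothetical, chosen only to show how ranking patients by past spending can exclude a group that receives less spending at the same level of need.

```python
from dataclasses import dataclass

# Hypothetical spending per unit of need: group B receives less for the same need.
SPEND_PER_NEED = {"A": 3, "B": 2}


@dataclass(frozen=True)
class Patient:
    group: str
    need: int  # hypothetical illness score (higher means sicker)
    cost: int  # hypothetical past healthcare spending


def make_patients() -> list[Patient]:
    """Build two groups with identical need distributions (scores 1 to 10)."""
    return [
        Patient(group, need, need * SPEND_PER_NEED[group])
        for group in ("A", "B")
        for need in range(1, 11)
    ]


def flag_for_extra_care(patients, key, k):
    """Select the k highest-ranked patients according to `key`."""
    return sorted(patients, key=key, reverse=True)[:k]


def count_group(patients, group):
    """Count how many selected patients belong to `group`."""
    return sum(p.group == group for p in patients)


patients = make_patients()
by_cost = flag_for_extra_care(patients, key=lambda p: p.cost, k=4)
by_need = flag_for_extra_care(patients, key=lambda p: p.need, k=4)

print("Group B flagged when ranking by cost:", count_group(by_cost, "B"))
print("Group B flagged when ranking by need:", count_group(by_need, "B"))
```

In this toy setup both groups are equally sick, yet ranking by cost flags no one from group B, while ranking by need flags both groups equally.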

Political bias in AI statistics #

AI bias statistics published in the Harvard Data Science Review found that ChatGPT favored left-leaning viewpoints in 14 out of 15 political orientation tests, with significant bias displayed toward parties and politicians such as the Democrats in the US, Labour in the UK, and Lula in Brazil.

However, a 2025 report from Humanities and Social Sciences Communications suggests this trend may be reversing. The study asked various models of ChatGPT the 62 questions on the Political Compass Test, an online questionnaire that assigns users a place on the political spectrum based on their answers.

After asking each question over 3,000 times to the various models, the study found a significant shift to right-wing leanings among newer models, compared to older versions of ChatGPT. However, the report still describes ChatGPT as having predominantly ‘libertarian left’ values.

Age bias in AI statistics #

A study on age-based AI bias conducted by Next Up found that AI recruitment tools are 30% more likely to filter out candidates over 40, compared to younger candidates with identical qualifications. Additionally, a study from the University of California found that job applications from older candidates receive 50% fewer callbacks than those from younger candidates with identical CVs.
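A figure such as "30% more likely" is a relative rate: the filter-out rate for one group divided by the rate for another. The sketch below shows the arithmetic only. The function name and the candidate counts are hypothetical, chosen so that the result reproduces the 30% figure, and are not taken from the study.

```python
from fractions import Fraction


def relative_filter_rate(
    filtered_older: int, total_older: int,
    filtered_younger: int, total_younger: int,
) -> Fraction:
    """Ratio of the older group's filter-out rate to the younger group's."""
    older_rate = Fraction(filtered_older, total_older)
    younger_rate = Fraction(filtered_younger, total_younger)
    return older_rate / younger_rate


# Hypothetical counts: 130 of 1,000 older and 100 of 1,000 younger candidates filtered out.
ratio = relative_filter_rate(130, 1000, 100, 1000)
percent_more_likely = float((ratio - 1) * 100)

print(f"Older candidates are {percent_more_likely:.0f}% more likely to be filtered out")
```

Using `Fraction` keeps the ratio exact, so the result is not affected by floating-point rounding.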

With the American Association of Retired Persons (AARP) finding that 78% of workers over 50 have experienced ageism in the workplace, these findings have sparked fear that AI’s limitations could accentuate ageism in the workplace.

Public perception towards bias in AI #

A 2025 report from the Pew Research Center found that two-thirds (66%) of US adults are highly concerned about people getting inaccurate information from AI, with this figure rising to 70% among AI experts.

Biased decisions made by AI were another highly referenced fear, with 55% of both the public and AI experts saying they’re highly concerned.

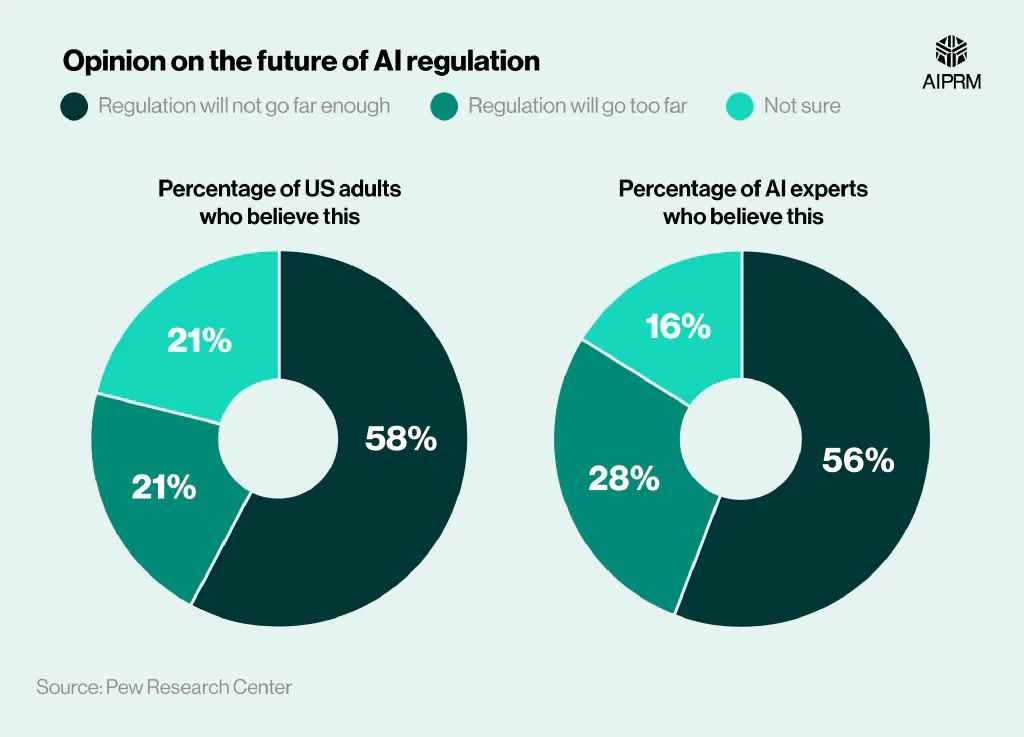

Opinions on the future of AI regulation among US adults and AI experts #

Analysis of AI bias statistics revealed that 58% of US adults believe AI regulation won’t go far enough, compared to 21% who believe that the technology will become overly regulated.

By comparison, 56% of AI experts believe regulation will not go far enough, with 28% believing it will go too far. This suggests that AI experts are more concerned about over-regulation than the general public.

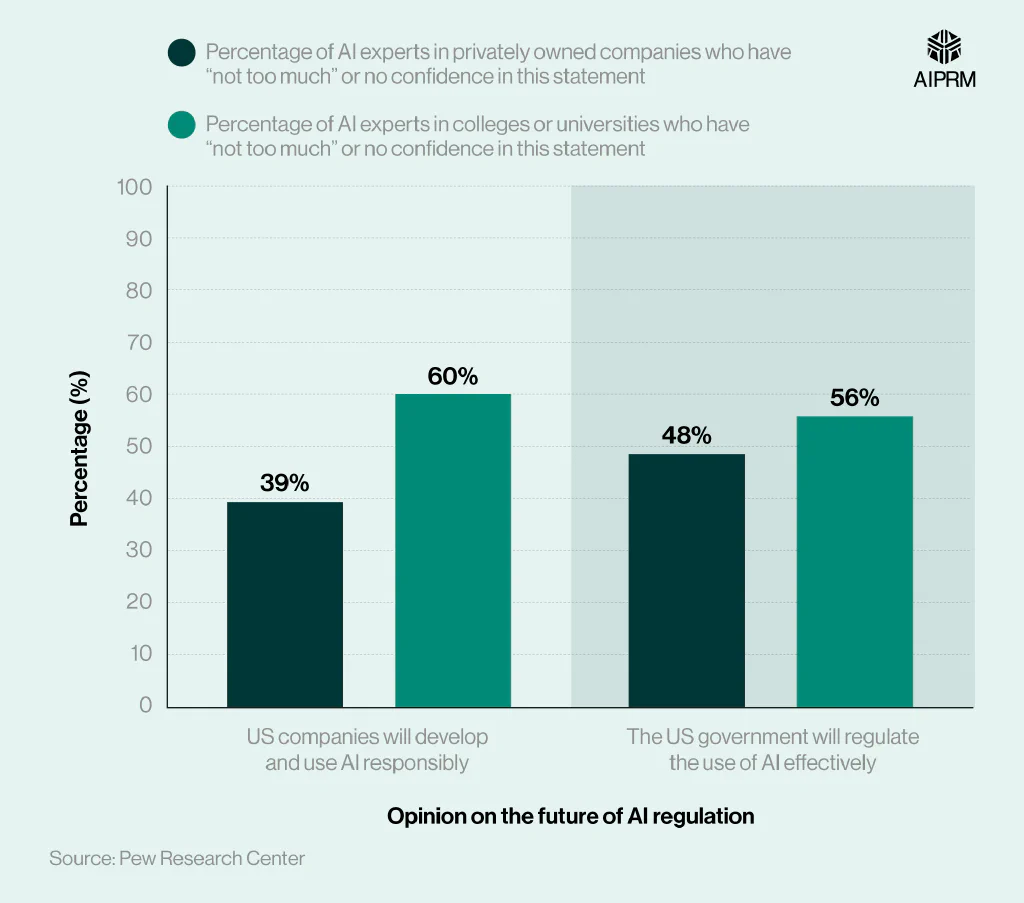

Opinions on the future of AI use among AI experts in private businesses and education #

Around two-fifths (39%) of AI experts at privately owned companies said they have either “no” or “not too much” confidence that US companies will develop and use AI responsibly. This figure was 21 percentage points higher (60%) among experts at colleges or universities, suggesting greater skepticism among AI experts working in the education sector.

Similarly, just under half (48%) of AI experts at private companies have “no” or “not too much” confidence that the US government will regulate the use of AI effectively. This was eight percentage points lower than the figure for their peers at colleges or universities (56%).

How to reduce bias in AI? #

With AI statistics finding that 56% of Americans regularly interact with AI, reducing bias is one of the key factors required to ensure the positive effects of artificial intelligence outweigh the negatives throughout society.

Achieving this goal will require a combination of technological development, algorithm modifications, government legislation, and responsible practices from product designers.

Below, we’ll break down some of the key requirements:

Human oversight #

Human oversight is key throughout every stage of AI development. The power of modern artificial intelligence programs means that the development of their output over time is unpredictable to everyone, including the engineers themselves.

Therefore, sufficient monitoring of what is included and excluded from the algorithm is key when designing a product or tool. Even once developed, regular monitoring is required to identify any areas of bias, inaccuracy, prejudice, or misinformation.

Whether it’s increasing the sample size of a dataset, diversifying the information sources, or removing harmful metrics, human oversight allows us to analyze the strengths and weaknesses of an AI model and to ensure it remains aligned with ethical and societal expectations.

Consistent research and development #

Even with responsible design and human oversight, technological limitations can result in undesired output from AI tools. While the constantly developing nature of the technology is enabling us to generate quality output more consistently than ever, it’s important that development continues in the coming years.

Investment in research and product development increases the likelihood of AI products improving quickly and solving the systemic issues that contribute to instances of bias or misinformation.

Government legislation #

The right government legislation is key to ensuring artificial intelligence is used ethically and responsibly. While many prominent AI organizations have shown commitment to ethical development, sufficient government legislation around AI bias and misinformation ensures that all platforms are duty-bound to remain committed to displaying these principles in their output.

While some instances of bias may be unavoidable as platforms continue to develop, having a core set of legal principles that companies can be held accountable for helps foster a culture of responsible AI development. Therefore, intelligent implementation of AI laws around the world is crucial to ensuring artificial intelligence develops in a way that cultivates fairness across society.

FAQs #

What is bias in AI?

Bias in AI refers to any instance of positive or negative subjectivity displayed in the output of an artificial intelligence tool, model, or software. Bias can manifest around a variety of issues, including:

- Gender

- Race

- Age

- Religion

- Socioeconomic status

- Background

- Nationality

- Sexuality

- Political views.

What is algorithmic bias in AI?

Algorithmic bias in AI refers to systematic errors within algorithms that result in unfair or unbalanced views and outcomes in the tools and systems trained on them. Whether intentional or accidental, these algorithmic errors can reduce an AI tool’s ability to produce objective and accurate information around certain subjects.

Is AI biased?

While AI is not inherently biased, factors such as algorithmic design, technological limitations, and human input can reduce the technology’s ability to produce objective responses in certain instances.

Therefore, a culture of responsibility, monitoring, and development is required to ensure bias in AI is kept to a minimum as it increases in prominence throughout society.

What are two types of bias that can occur in AI?

The two most common types of bias that can occur in AI are data bias and algorithmic bias. Data bias occurs when training data used to develop an AI system is incomplete, inaccurate, unrepresentative, or reflects existing societal stereotypes.

Algorithmic bias, on the other hand, occurs when the algorithm itself leads to unfair or subjective outcomes, even if the data is balanced. This can be due to flawed rules, incorrect assumptions, design choices, or system errors.

Which is an area in which AI systems can exhibit bias?

AI can exhibit bias in numerous areas, including race, gender, sexuality, background, nationality, and political beliefs. The prevalence of these biases can be limited with due care during the design process and consistent monitoring as a system’s output develops.

Glossary #

Algorithm #

Algorithms are sets of rules, principles, or processes to be followed in calculations and other problem-solving activities by a computer, program, or system.

ChatGPT #

ChatGPT is a prominent generative AI tool developed by OpenAI that uses large language models (LLMs) to generate human-like text responses to user requests.

Large Language Models (LLMs) #

LLMs are types of machine learning models trained on vast amounts of text data that can understand, process, and respond in human-like language.

Sources #

https://www.bbc.co.uk/news/articles/c4g8nxrk207o

https://www.foxnews.com/media/chatgpt-combat-bias-new-measures-put-forth-openai

https://blog.hubspot.com/marketing/ai-bias

https://arxiv.org/pdf/2301.01768

https://bmcmedinformdecismak.biomedcentral.com/articles/10.1186/s12911-025-03118-0

https://www.washington.edu/news/2024/10/31/ai-bias-resume-screening-race-gender/

https://www.theguardian.com/technology/2024/mar/16/ai-racism-chatgpt-gemini-bias

https://arxiv.org/pdf/2403.00742

https://www.science.org/doi/10.1126/science.aax2342

https://hdsr.mitpress.mit.edu/pub/qh3dbdm9/release/2

https://www.nature.com/articles/s41599-025-04465-z

https://www.next-up.com/insights/ageism-in-hiring-how-ai-is-crushing-talent-over-50/